Deep Learning - Sequence Models - Week 1

After some delay, I’m getting around to finishing the Andrew Ng series of deep learning classes. The Sequence Model class is especially interesting due to the progress being made in applying deep learning to NLP.

What is a recurrent neural network?

In The Unreasonable Effectiveness of Recurrent Neural Networks, Andrej Karpathy explains it like this:

“The core reason that recurrent nets are more exciting is that they allow us to operate over sequences of vectors: Sequences in the input, the output, or in the most general case both.” [6] A basic neural network approximates some unknown function, mapping a fixed-size vector of inputs to a fixed-size vector of outputs. “If training vanilla neural nets is optimization over functions, training recurrent nets is optimization over programs.” [6] RNNs accumulate state over an arbitrary-length sequence of steps, so information from previous steps can inform the output at the current step. A common example is subject-verb agreement: whether a verb should be singular or plural depends on a subject that may have appeared many words earlier.

Another good resource is Understanding LSTM Networks by Chris Olah.

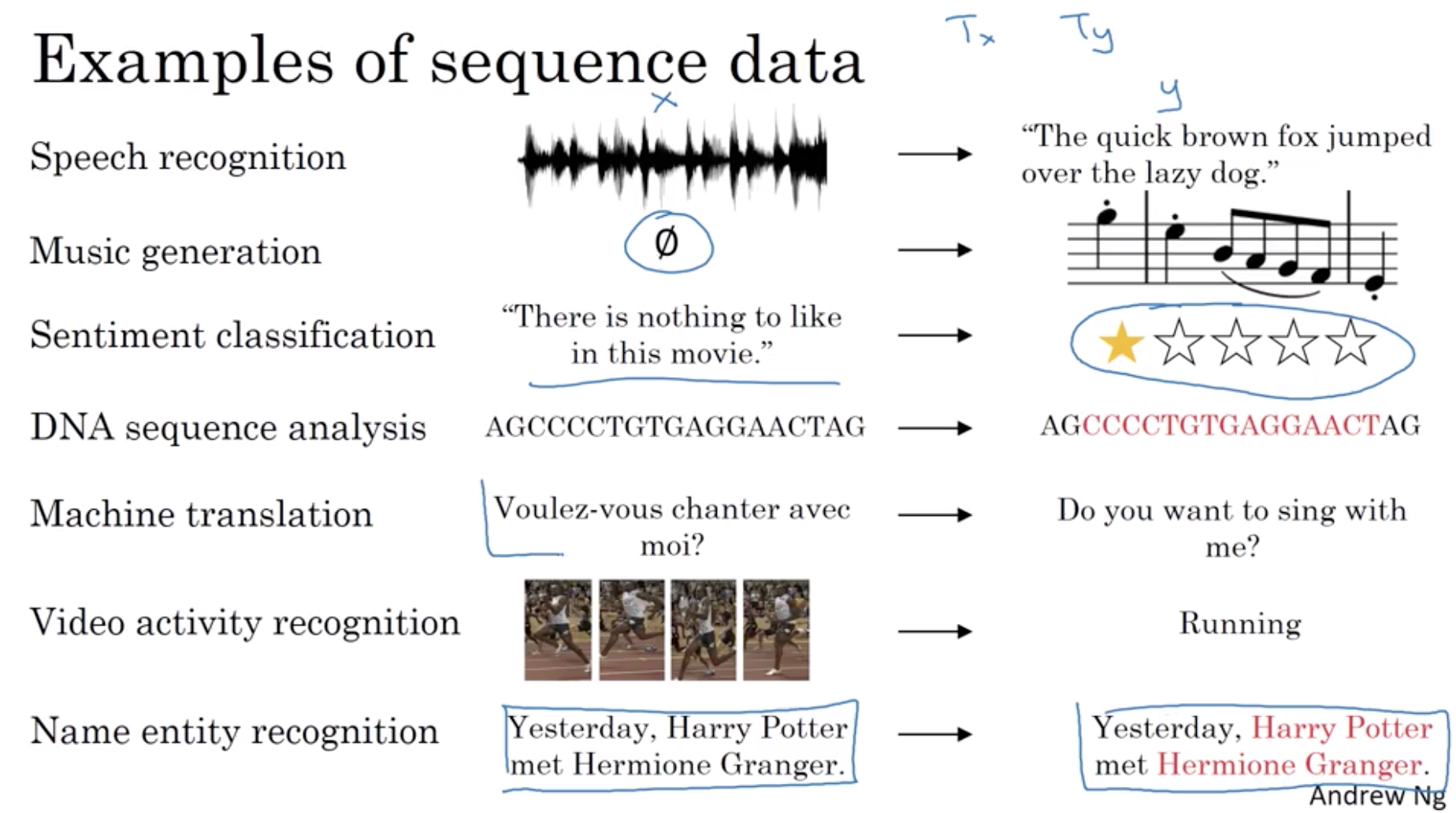

Applications of RNNs

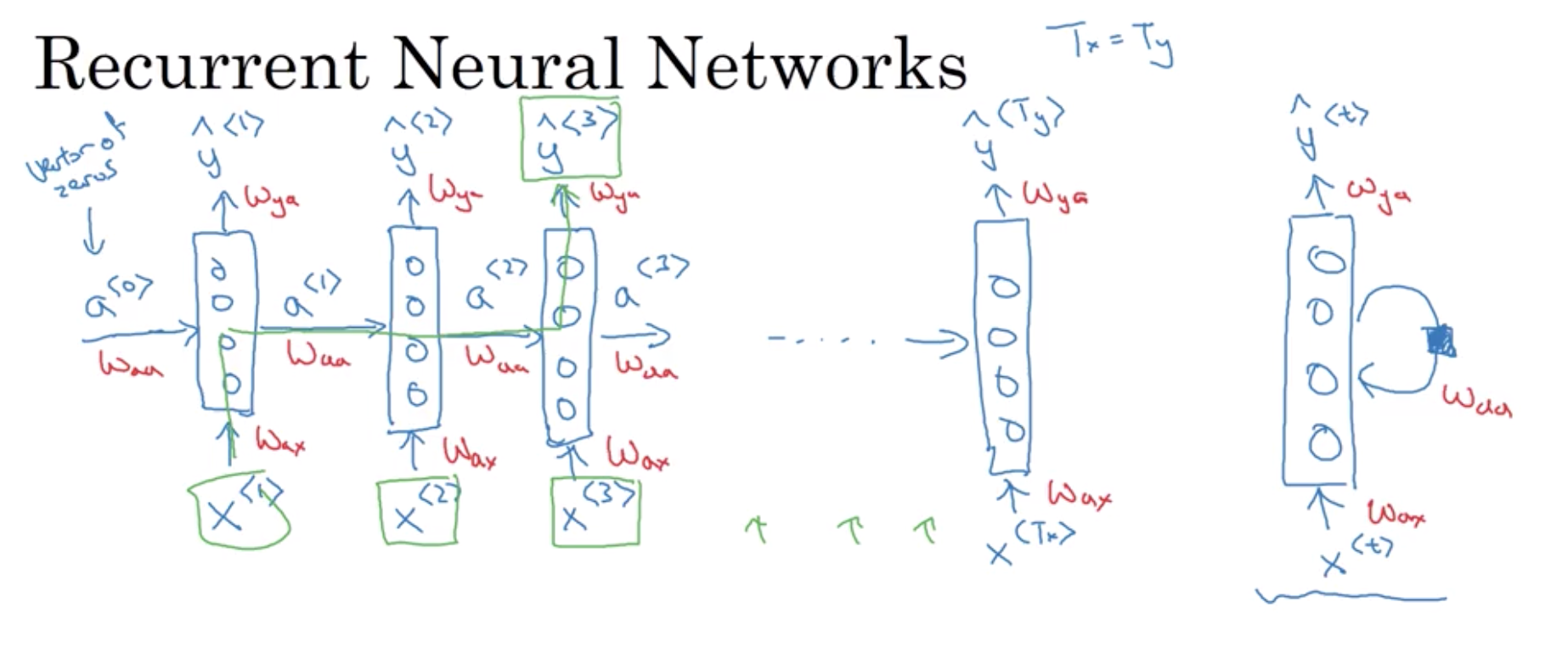

RNN Structure

An RNN unit uses both the current input, X<t>, and the activation from the previous step, a<t-1>, to produce the output y<t> and the activation a<t> that is passed on to the next step.

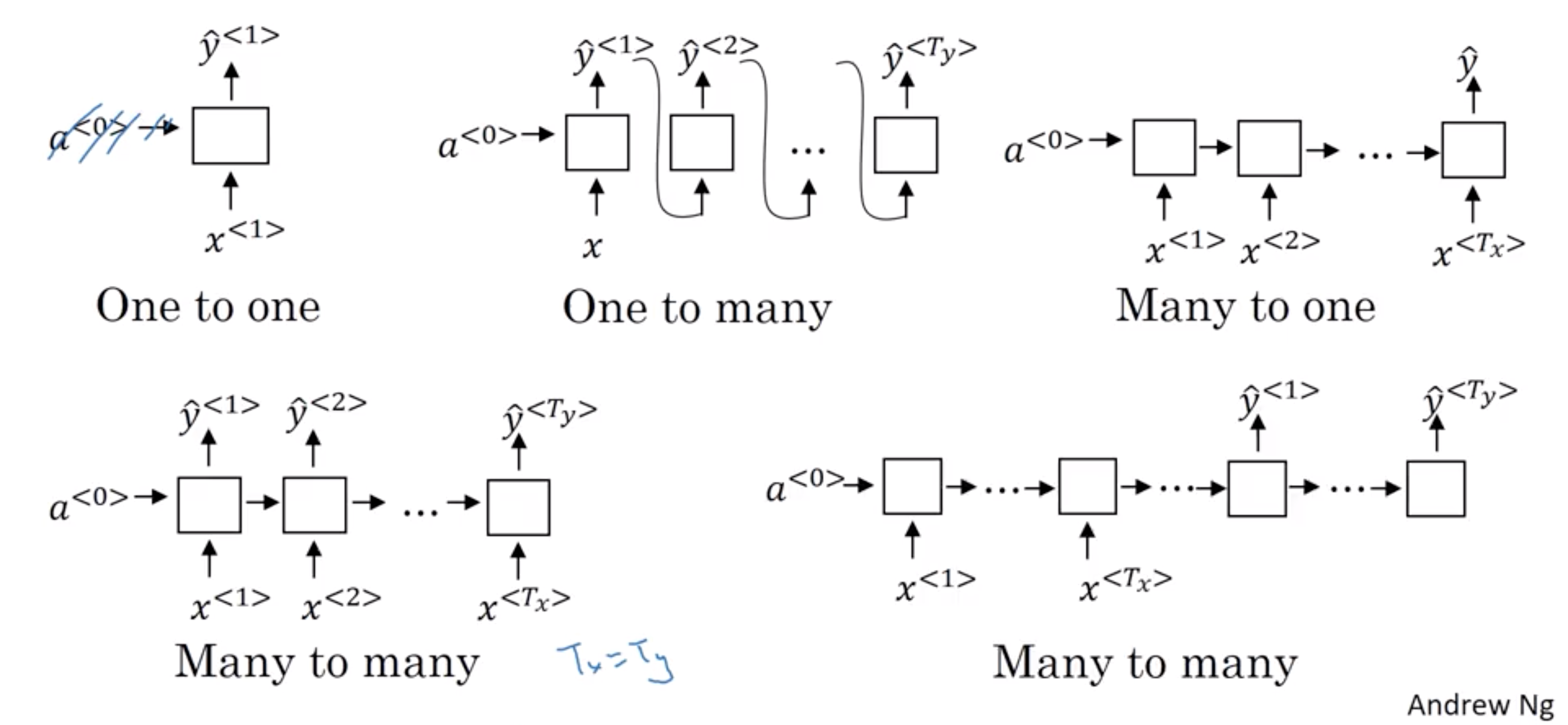
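To make the cell concrete, here is a minimal NumPy sketch of one forward step and of a loop that reuses the same weights over a sequence of any length. It is not the course's exercise code: the function names, the `params` dictionary keys, the tanh/softmax choice, and the dimensions are all assumptions picked for illustration.

```python
import numpy as np

def softmax(z):
    # Subtract the column-wise max for numerical stability.
    e = np.exp(z - z.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

def rnn_cell_forward(x_t, a_prev, params):
    """One time step: current input and previous activation -> (a<t>, y<t>)."""
    a_t = np.tanh(params["Waa"] @ a_prev + params["Wax"] @ x_t + params["ba"])
    y_t = softmax(params["Wya"] @ a_t + params["by"])
    return a_t, y_t

def rnn_forward(xs, a0, params):
    """Run the cell over every step of a sequence, carrying the activation along."""
    a_t, ys = a0, []
    for x_t in xs:
        a_t, y_t = rnn_cell_forward(x_t, a_t, params)
        ys.append(y_t)
    return a_t, ys

# Hypothetical sizes: 3 input features, 5 hidden units, 2 output classes, batch of 4.
rng = np.random.default_rng(0)
n_x, n_a, n_y, m = 3, 5, 2, 4
params = {
    "Wax": rng.standard_normal((n_a, n_x)) * 0.1,
    "Waa": rng.standard_normal((n_a, n_a)) * 0.1,
    "Wya": rng.standard_normal((n_y, n_a)) * 0.1,
    "ba": np.zeros((n_a, 1)),
    "by": np.zeros((n_y, 1)),
}
xs = [rng.standard_normal((n_x, m)) for _ in range(7)]  # a 7-step sequence
a_last, ys = rnn_forward(xs, np.zeros((n_a, m)), params)
```

Because the loop only ever needs the previous activation, the same `params` work for a 7-step sequence or a 700-step one; each `ys[t]` is a column-wise probability distribution over the output classes.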

Summary of RNN Types

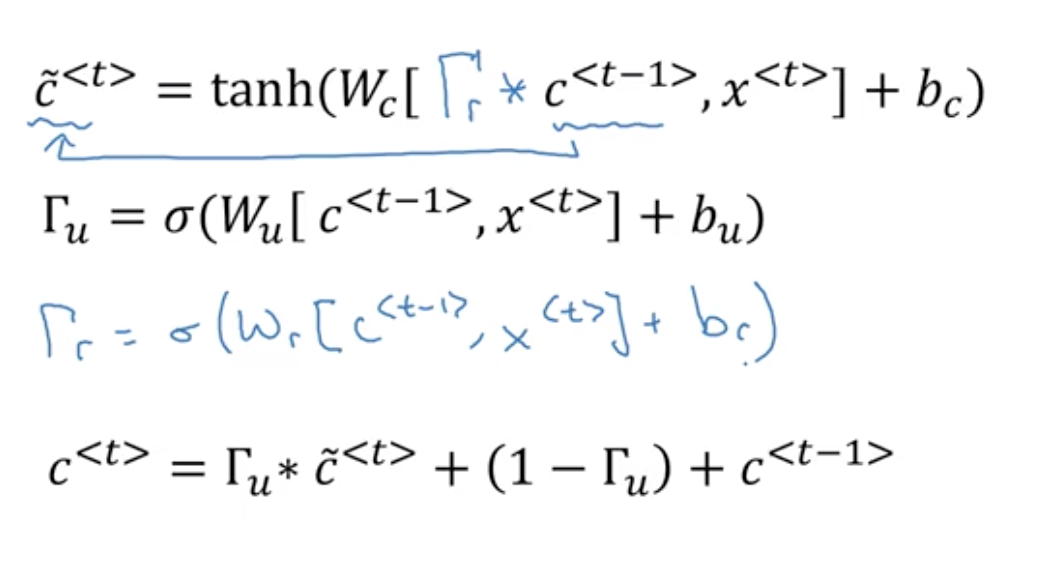

Gated recurrent unit

The gated recurrent unit (GRU) is a simplification of the LSTM. It has fewer parameters to train, at the cost of a somewhat less expressive model. See: Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling.

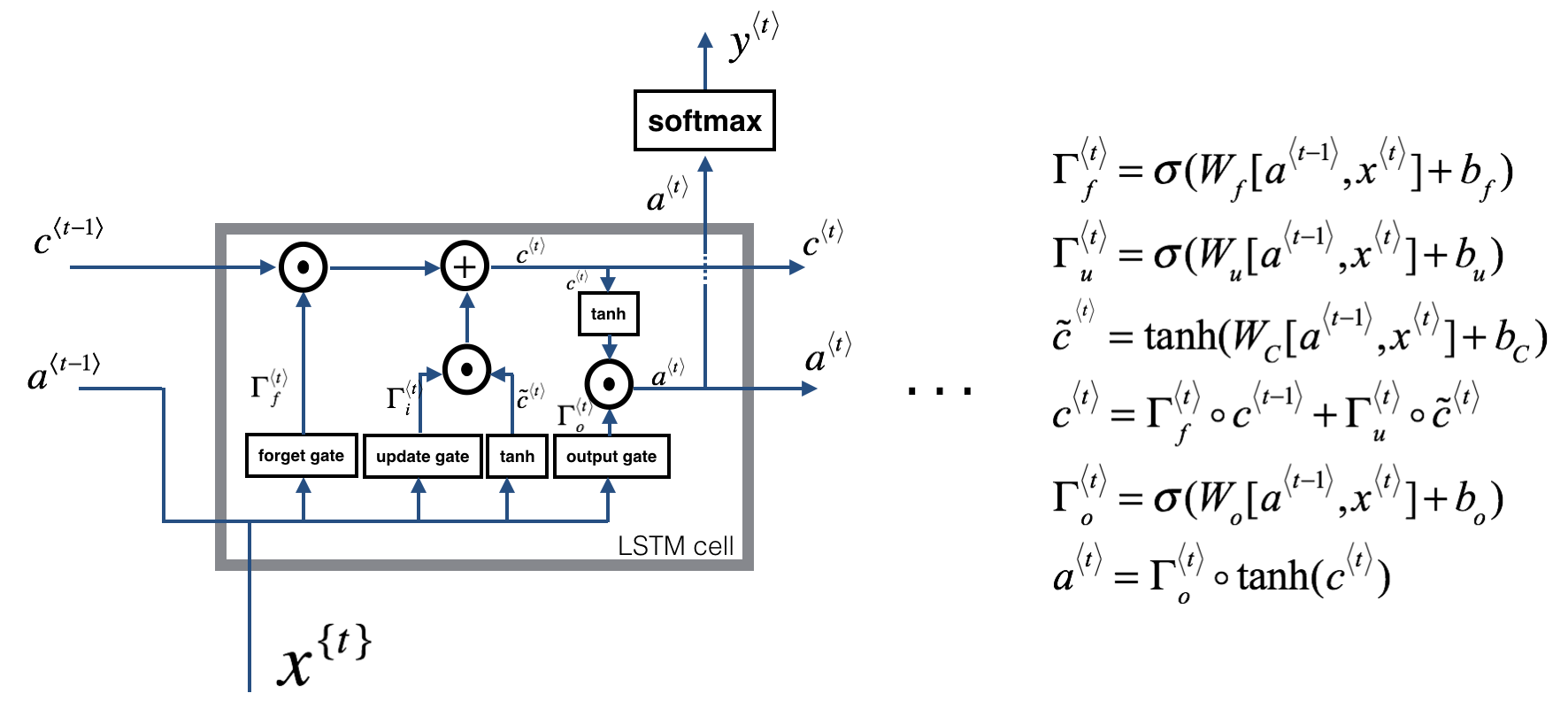
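As a rough sketch of what the gating looks like in code, the following NumPy function implements one GRU step with an update gate and a relevance gate, following the formulation commonly taught for this unit. The function name, the parameter keys, and the sizes are assumptions for illustration, not taken from the class materials.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_cell_forward(x_t, c_prev, p):
    """One GRU step; the memory cell c<t> doubles as the activation a<t>."""
    concat = np.vstack([c_prev, x_t])
    gamma_u = sigmoid(p["Wu"] @ concat + p["bu"])  # update gate
    gamma_r = sigmoid(p["Wr"] @ concat + p["br"])  # relevance gate
    # Candidate memory, computed from the relevance-gated previous cell.
    c_cand = np.tanh(p["Wc"] @ np.vstack([gamma_r * c_prev, x_t]) + p["bc"])
    # Blend: keep the old value where the update gate is near 0.
    return gamma_u * c_cand + (1.0 - gamma_u) * c_prev

# Hypothetical sizes: 3 input features, 5 hidden units, batch of 4.
rng = np.random.default_rng(1)
n_x, n_a, m = 3, 5, 4
p = {k: rng.standard_normal((n_a, n_a + n_x)) * 0.1 for k in ("Wu", "Wr", "Wc")}
p.update({k: np.zeros((n_a, 1)) for k in ("bu", "br", "bc")})

x_t = rng.standard_normal((n_x, m))
c_prev = rng.standard_normal((n_a, m))
c_t = gru_cell_forward(x_t, c_prev, p)

# Forcing the update gate shut makes the cell carry its memory through unchanged.
p_closed = dict(p, bu=np.full((n_a, 1), -50.0))
c_keep = gru_cell_forward(x_t, c_prev, p_closed)

# Recurrent parameter counts: a GRU has 3 weight/bias sets, an LSTM has 4.
per_gate = n_a * (n_a + n_x) + n_a
gru_params, lstm_params = 3 * per_gate, 4 * per_gate  # 135 vs 180 at these sizes
```

The last two lines illustrate the "fewer parameters" point: for the same hidden size, the GRU's recurrent part needs three quarters of the weights an LSTM does.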

Long short-term memory

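LSTM units add a bit more sophistication, taking the previous activation a<t-1> and the contents of a memory cell c<t-1>, which may be additively updated or forgotten at each step. Separate forget and update gates control how much of the old cell contents is kept and how much new candidate content is added.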
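The additive update is easier to see in code. Below is a minimal NumPy sketch of a single LSTM step with forget, update, and output gates; the names, parameter keys, and sizes are assumptions chosen for illustration, and the output layer that would turn a<t> into y<t> is left out.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_cell_forward(x_t, a_prev, c_prev, p):
    """One LSTM step: (x<t>, a<t-1>, c<t-1>) -> (a<t>, c<t>)."""
    concat = np.vstack([a_prev, x_t])
    gamma_f = sigmoid(p["Wf"] @ concat + p["bf"])  # forget gate
    gamma_u = sigmoid(p["Wu"] @ concat + p["bu"])  # update gate
    gamma_o = sigmoid(p["Wo"] @ concat + p["bo"])  # output gate
    c_cand = np.tanh(p["Wc"] @ concat + p["bc"])   # candidate memory
    # Additive update: keep part of the old cell, add part of the candidate.
    c_t = gamma_f * c_prev + gamma_u * c_cand
    a_t = gamma_o * np.tanh(c_t)
    return a_t, c_t

# Hypothetical sizes: 3 input features, 5 hidden units, batch of 4.
rng = np.random.default_rng(2)
n_x, n_a, m = 3, 5, 4
p = {k: rng.standard_normal((n_a, n_a + n_x)) * 0.1 for k in ("Wf", "Wu", "Wo", "Wc")}
p.update({k: np.zeros((n_a, 1)) for k in ("bf", "bu", "bo", "bc")})

x_t = rng.standard_normal((n_x, m))
a_prev = np.zeros((n_a, m))
c_prev = rng.standard_normal((n_a, m))
a_t, c_t = lstm_cell_forward(x_t, a_prev, c_prev, p)

# With the forget gate held open and the update gate held shut,
# the memory cell passes through the step essentially unchanged.
p_remember = dict(p, bf=np.full((n_a, 1), 50.0), bu=np.full((n_a, 1), -50.0))
_, c_keep = lstm_cell_forward(x_t, a_prev, c_prev, p_remember)
```

Because the old cell value enters `c_t` through a sum rather than through repeated matrix multiplication, a cell whose forget gate stays near 1 can carry information across many steps.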

Programming exercises

The 1st week of the class has 3 programming exercises. The first covers the mechanics of the RNN and LSTM cells and the corresponding backpropagation. I need to work on understanding the calculus better. In the 2nd exercise, they have you train character-level language models for dinosaur names and Shakespeare sonnets.

The 3rd exercise, an LSTM Jazz generator, is near and dear to my heart as a music fanatic.

References

Text generation

- Minimal character-level Vanilla RNN model. Written by Andrej Karpathy

- LSTM text generation example by the Keras team

Jazz

The ideas presented in this notebook came primarily from three computational music papers cited below. The implementation here also took significant inspiration and used many components from Ji-Sung Kim’s GitHub repository.

- Ji-Sung Kim, 2016, deepjazz

- Jon Gillick, Kevin Tang and Robert Keller, 2009, Learning Jazz Grammars

- Robert Keller and David Morrison, 2007, A Grammatical Approach to Automatic Improvisation

- François Pachet, 1999, Surprising Harmonies